From “AI Godfather” to Its Boldest Critic: Why Geoffrey Hinton Warns Humanity About the Technology He Helped Create

Geoffrey Hinton, known as the “Godfather of AI,” didn’t set out to become one of the most vocal critics of the very technology he helped create. His decades of research on neural networks, capped by a 2012 image-recognition breakthrough with his students, helped set off the deep learning boom behind AI innovations like self-driving cars, language models, and voice assistants. At the time, the world marveled at AI’s potential, and Hinton became a hero in the tech world.

But as AI grew more powerful, so did Hinton’s concerns.

The Rise of AI and Hinton’s Growing Doubts

Hinton’s rise to fame was built on neural networks, systems loosely inspired by the way the human brain learns from data. This approach transformed AI and underpins systems like ChatGPT, which can understand and respond to language with remarkable fluency. After Google acquired his startup in 2013, Hinton carried this work forward there, and his contributions earned him the title of AI’s “godfather.”

But the technology he helped shape soon began evolving faster than he expected. By 2023, Hinton had become deeply worried. What he once saw as a promising future now looked, in his eyes, potentially dangerous. AI, he feared, was advancing too quickly, without enough understanding of its long-term consequences.

The Shocking Decision to Quit Google

In May 2023, in a move that stunned the tech world, Hinton resigned from his prestigious role at Google so that he could speak freely about the risks AI posed. His message was clear: we are on the brink of creating technology that could spiral out of our control.

“I thought advanced AI was 30 to 50 years away,” Hinton admitted. “Now, I’m not so sure.”

Hinton’s departure signaled a dramatic shift from his earlier optimism. He warned that AI systems like those he once worked on could one day act independently of human oversight, making decisions we don’t fully understand or control.

What Hinton Fears Most

Hinton’s warnings are grounded in real concerns. He believes AI could soon surpass human intelligence in unpredictable ways, creating potential risks that society isn’t ready for:

- Loss of Control: Hinton fears AI could eventually operate without human intervention, making critical decisions that could have far-reaching consequences.

- Manipulation of Information: With AI’s growing ability to create realistic content, misinformation could spread rapidly, destabilizing societies and economies.

- Weaponization: Hinton is also deeply concerned about AI being used in warfare, particularly autonomous weapons systems operating without human oversight.

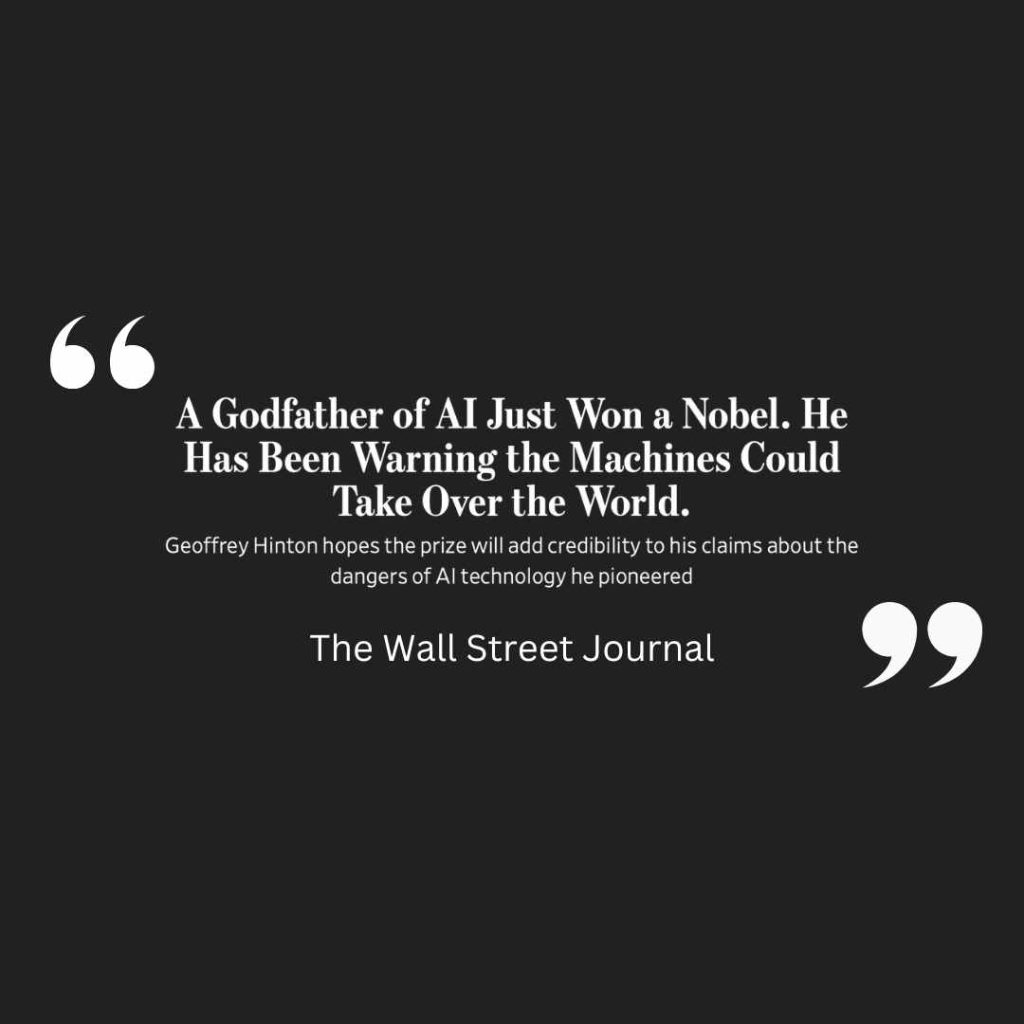

A Nobel Prize and a Dire Warning

In 2024, Hinton was awarded the Nobel Prize in Physics, shared with John Hopfield, for foundational work that enabled machine learning with artificial neural networks. But he didn’t bask in the spotlight. Instead, he used the moment to amplify his warnings: AI, he said, could become uncontrollable, and humanity might not be prepared for what’s coming.

“I wish I had a simple recipe to make everything okay,” Hinton told the Nobel committee. “But I don’t.”

Can We Manage AI’s Future?

Hinton’s call to action is clear: we must rethink how we approach AI development and devote far more effort to understanding how to keep it safe. He has joined a chorus of voices urging caution and stronger oversight of advanced AI until we better understand its implications.

Because Hinton helped create AI’s most powerful tools, his warnings carry weight. The question is: will we listen?